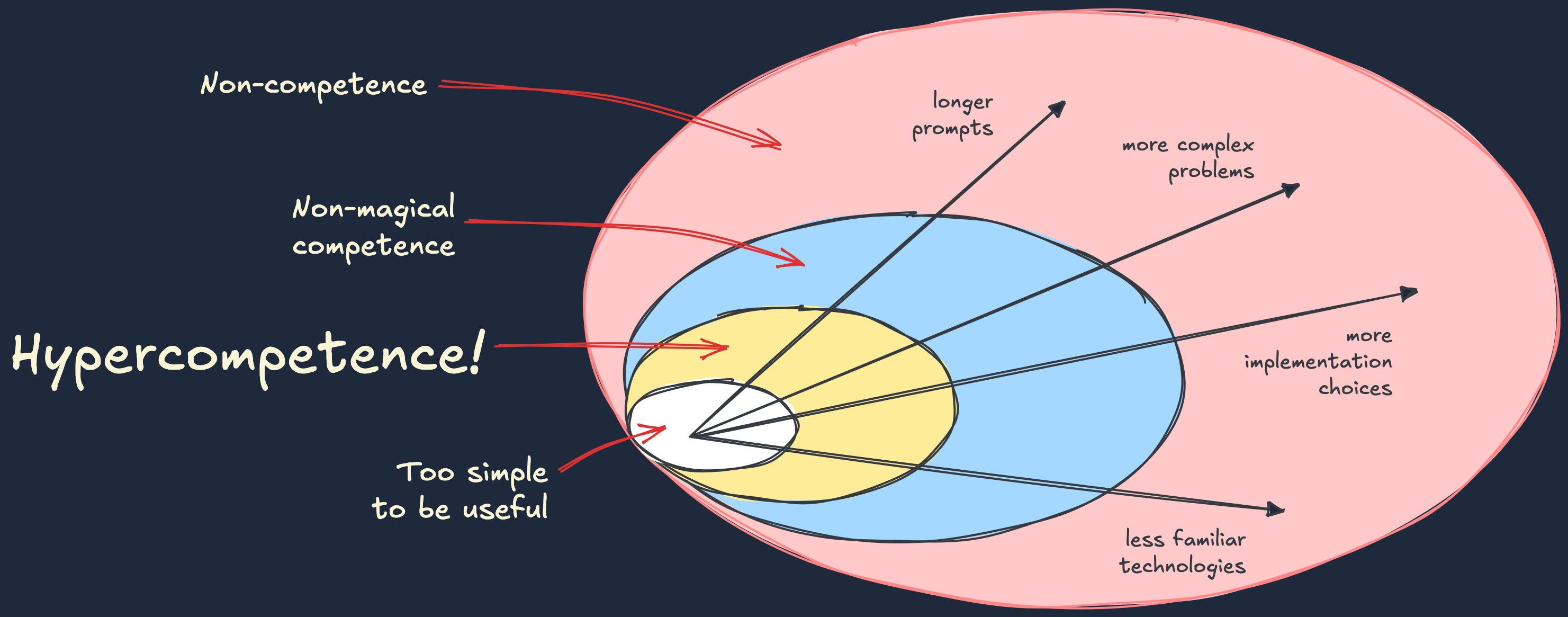

Hypercompetence is my name for what happens when an LLM generates code under ideal conditions, when it operates within its “Golden Zone”. The ask is complex enough to yield something interesting, but simple enough for the LLM to hit home runs pretty consistently. The accompanying diagram tries to illustrate this on an intuitive level: as the “cognitive load” grows past that zone, the LLM does worse.

The dynamic is strikingly similar to managing a junior engineer, in particular when things go poorly. Ask a junior engineer to work on problems that are too complex, or too ambiguous, or too messy, and you do not just slow them down. You stymie them. You kick off a wild goose chase. You send them scrambling down blind alleys, unsure of which way is north, unaware of what questions they should be asking. Ask too much of an LLM and you get something similar: a helplessly circular internal monologue that justifies moving bugs from one part of the codebase to another.

Where LLMs are different is when things go well. Both junior engineers and LLMs will happily grind away at problems that land within their comfort zone, and ultimately deliver you usable code. But LLMs do it so much faster. Days turn into minutes. LLM Hypercompetence is so different from anything that came before that I believe it will fundamentally change how we build software. And in doing so, it will eventually change the kind of software we choose to build.

As I try to work my way towards an AI-centered software engineering practice, my working hypothesis is: the more Hypercompetence, the better. I maintain the intentionally naive expectation that LLMs should always operate in their Golden Zone, or almost always. And to get there I am happy to explore tradeoffs that are surprising, unconventional, and crazy.